13 KiB

+++ title = "Glome-ing the Gossip, part 1: nebkor-maelstrom" slug = "glome-ing-pt1" date = "2024-06-11" [taxonomies] tags = ["software", "rnd", "sundry", "proclamation", "rust", "distributed-systems"] +++

nebkor-maelstrom, a simple crate for writing Maelstrom clients

Recently, I started working my way through the Gossip Glomers distributed system challenges1, and as usual wanted to use Rust to do it. There are a few off-the-shelf crates available for this, but none of them quite hit the spot for me. As a result, I wrote and released a new crate for writing Maelstrom clients, nebkor-maelstrom. This post is going to talk about that; there's a follow-up post coming soon that will go into my solutions using it.

Warning: seriously niche content.

Why write a new framework?

First off, what exactly is Maelstrom? From the front page of the Gossip Glomers, it's a platform that:

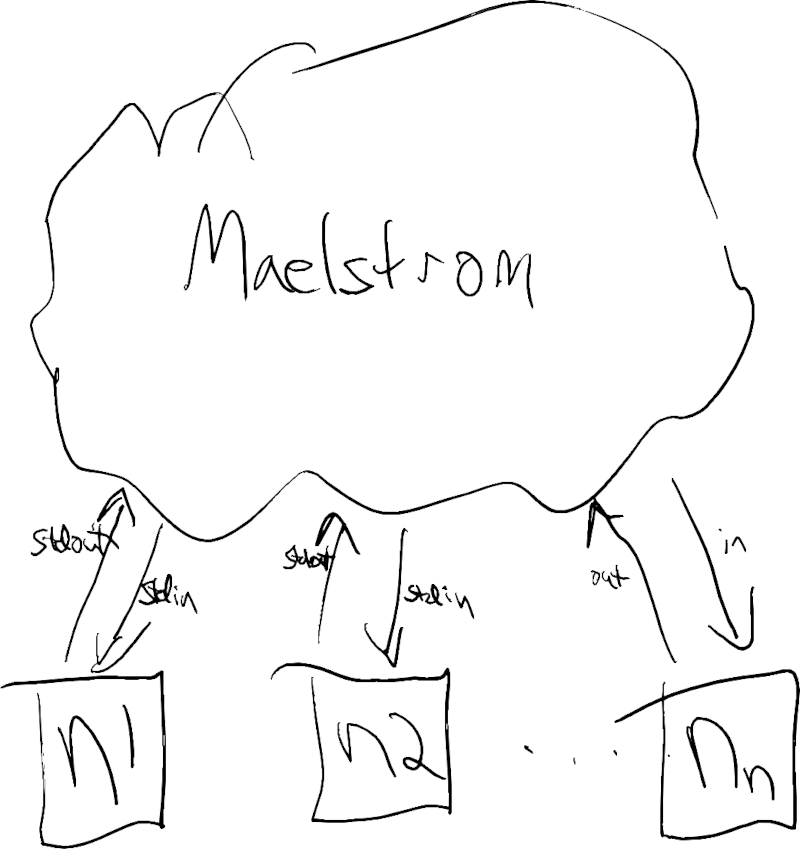

lets you build out a 'node' in your distributed system and Maelstrom will handle the routing of messages between the those nodes. This lets Maelstrom inject failures and perform verification checks based on the consistency guarantees required by each challenge.

Maelstrom itself is built on top of Jepsen2, a framework for testing

and verifying properties of distributed systems. Concretely, it's a program that you run, giving it

the path to a binary that implements the Maelstrom protocol, along with the name of a "workload"

meant to emulate particular distributed systems. It will then spawn a bunch of copies of the node

that you wrote and start passing it messages, listening for responses, using stdin and stdout as

the network medium:

Each challenge consists of writing a node that implements a single workload. There are six workloads in the Glomers challenges, though ten are available within Maelstrom.

The front page of the challenge goes on to say, "The documentation for these challenges will be in Go, however, Maelstrom is language agnostic so you can rework these challenges in any programming language." They provide packages for several different languages, including Rust. So, why something new?

I actually started out using the crate they include as a demo, maelstrom-node. However, it didn't take too long before I started chafing at it:

- there was a lot of boilerplate;

- each message type required its own custom data structure;

- it used async, which increased both he boilerplate as well as the compile times.

So after watching Jon Gjengset work through the first couple challenges while rolling his own Maelstrom support as he went, I decided to do the same, paying attention to the following characteristics:

minimal boilerplate

This is subjective, but the framework is not the solution, and should stay as much out of your way

as possible, while providing the most support. The maelstrom-node crate obligated quite a bit of

ceremony, as you can see from the difference between my "echo" client using it vs. my own. First

off, the client using the off-the-shelf crate:

#[tokio::main]

async fn main() {

let handler = Arc::new(Handler::default());

let _ = Runtime::new()

.with_handler(handler)

.run()

.await

.unwrap_or_default();

}

Now with my crate:

fn main() {

let node = Echo;

let runner = Runner::new(node);

runner.run(None);

Of course, when the code is that short, it's hard to appreciate the differences. But the first

version pulled in over over 60 dependencies; the second is half that due to not pulling in

Tokio. Also, the client is responsible for wrapping their node in an Arc, instead of letting the

runtime's

runner

take a generic parameter:

pub fn with_handler(self, handler: Arc<dyn Node + Send + Sync>) -> Self

vs

pub fn new<N: Node + 'static>(node: N) -> Self

Another source of boilerplate was the fact that the trait method for handling messages in the first version has the following signature:

async trait Node {

async fn process(&self, runtime: Runtime, req: Message) -> Result<()>;

}

Notably, &self is immutable, which means that if you need to keep any state in your node, you need

to wrap it in like Arc<Mutex<Vec<_>>, which means your client code is littered with like

// drop into a temp scope so guard is dropped after

{

let mut guard = self.store.lock().unwrap();

guard.borrow_mut().entry(key.to_string()).or_insert(val);

}

My own crate's analogous trait

method

receives as &mut self, so the above just turns into

self.store.entry(key.to_string()).or_insert(val);

which is 75% fewer lines.

My most complex client was the one for the kafka workload, at 158 lines; the simplest was for the

echo workload, at 21 lines. All in, the whole count for my Gossip Glomers challenges is:

$ wc -l */*/*.rs |sort -n

21 gg-echo/src/main.rs

29 gg-uid/src/main.rs

59 gg-g_counter/src/main.rs

141 gg-txn/src/main.rs

142 gg-broadcast/src/main.rs

158 gg-kafka/src/main.rs

550 total

weak typing/not for production

The Maelstrom protocol is

extremely simple. The physical

layer consists of stdin and

stdout as already mentioned. The wire format is newline-separated JSON objects:

{

"src": A string identifying the node this message came from

"dest": A string identifying the node this message is to

"body": An object: the payload of the message

}

The body is where all the real action is, however:

{

"type": (mandatory) A string identifying the type of message this is

"msg_id": (optional) A unique integer identifier

"in_reply_to": (optional) For req/response, the msg_id of the request

}

A body can also contain arbitrary other keys and values, and each workload has a different set of

them.

A lot of the crates I saw that offered Maelstrom support exposed types like Message<P>, where P

is a generic "payload" parameter for a Body<P>, and would usually be an enum with a specific variant

for each possible message type in the workload.

I totally get where they're coming from; that kind of strict and strong type discipline is very on-brand for Rust, and I'm often grateful for how expressive and supportive Rust's type system is.

But for this, which is a tinkertoy set for building toy distributed systems, I thought it was a bit much. My types look like this, with no generics3:

pub struct Message {

pub src: String,

pub dest: String,

pub body: Body,

}

In general, you don't worry about constructing a Message yourself in your handler, you construct a

Body using Body::from_type(&str) and Body::with_payload(self, Payload) builder methods, then

pass that to a send or reply method that adds the src and dest.

The Body is a little more involved, mostly because the Serde attributes double the line

count,

but:

pub struct Body {

pub typ: String,

pub msg_id: u64,

pub in_reply_to: u64,

pub payload: Payload,

// the following are for the case of errors

pub code: Option<ErrorCode>,

pub text: Option<String>,

}

Payload is a type alias for serde_json::Map<String, serde_json::Value>; it's decorated with

serde(flatten), which means, "when serializing, don't put a literal 'payload' field in the JSON,

just put the keys and values from it directly in the body". When a Body is being deserialized from

JSON, any unknown fields will be put into its payload.

The type of the Body, and hence the Message according to the Maelstrom protocol, is just a

string. When writing your clients, you only have a few possible message types to consider, and

it's easy and clear to just use bare strings in the places where they're needed.

I also deliberately ignore whole classes of errors and liberally unwrap() things like channel

send()s and mutex lock()s, because those kinds of errors are out of scope for Maelstrom. If you

can't get a handle to stdin, there's nothing you can do, so might as well assume its infallible.

no async/minimal dependencies

I've already mentioned this, so no real need to go over it again. Async is nice when you need it,

but when you don't, it's just more noise. nebkor-maelstrom has only three external deps (which have

their own dependencies, so the full transitive set is 10x this, but still):

[dependencies]

serde_json = "1"

serde = { version = "1", default-features = false, features = ["derive"] }

serde_repr = "0.1"

simple to understand

Finally, I wanted it to be as clean and minimal as possible. The async maelstrom-node

crate4, for example, is extremely thorough and featureful, but is well over a

thousand lines of fairly complicated code. nebkor-maelstrom, by contrast, is less than 500 lines

of extremely straightforward code:

$ wc -l src/*.rs

81 src/kv.rs

229 src/lib.rs

167 src/protocol.rs

477 total

Something that gave me particular trouble was how to handle RPC, that is, sending and receiving

messages while already handling a message. I faffed around trying to get something with callbacks

working, and then I finally found this project,

"Maelbreaker" (note the cool logo!), and copied its IO

system,

which used multi-producer/single-consumer channels to send messages with stdin and stdout from

separate threads5. I also stole their idea to use MPSC

channels

as the method for getting values returned to the caller while the caller is handling a message already.

Wrapping up

And here we are! All in all, I probably spent about twice as much time writing nebkor-maelstrom as

I did solving the Gossip Glomers challenges, though partly that's due to the chores associated with

publishing software that other people might plausibly use, and trying to be reasonably decent about

it; things like writing examples and documentation and reviewing it for quality. I also enjoyed

writing it in tandem with the exercises; every bit of functionality was directly motivated by acute need.

So, if you're thinking about tackling the challenges, and want to do them in Rust, I humbly suggest

checking nebkor-maelstrom out; it's only a cargo add away!

-

My partner could never remember the name of them until she came up with "glome-ing the cube". ↩︎

-

Jepsen is named after Carly Rae Jepsen (this is real), who sang the song, "Call Me Maybe", which is obviously about attempting to communicate in the face of network partition. ↩︎

-

There is one function that takes a generic parameter,

Runner::new<N>(node: N), but there are no generic data structures (dyn Nodeis not generic, it's dynamic). ↩︎ -

I swear I'm not picking on that crate, it's just a good contrast and I have direct experience using it. ↩︎

-

I was able to simplify the IO even more, from spawning four separate threads to two, and likewise cutting the number of MPSC channels in half. This is solely because I took the time to revisit the design and consider what could be redundant; I'm incredibly grateful to the Maelbreaker author for sharing their work, and I emailed them to let them know that (and they were glad to hear it!). ↩︎